Problem

I was contacted by a user asking if it was possible for his team to be able to execute specific SQL Agent jobs and check the job history. This was made possible by creating a SQL authenticated login and changing the job owner to this new account.

But this got me thinking about who actually has permissions when executing SQL Agent jobs. For example, who actually needs the SELECT\INSERT etc. permissions when a Powershell script is run using SQL Agent under a proxy account? Does the Job Owner or the RunAs account need access to the package for a SSIS job? I was surprised by the results and the security hole that can be opened (which I will reveal at the end).

The test involved running an INSERT statement into a table using a TSQL, Powershell and SSIS job step (as these are pretty much the only steps I use). The code to create the table is...

CREATE DATABASE [JobOwner_Perms_Test]

GO

CREATE TABLE [dbo].[JobOwner_Perms_Test] (

[Col1] int NOT NULL,

[Col2] varchar(max) NOT NULL,

[LoginName] varchar(max) NULL DEFAULT (suser_sname()),

[User_Name] varchar(max) NULL DEFAULT (user_name()))

GO

CREATE TABLE [dbo].[JobOwner_Perms_Test] (

[Col1] int NOT NULL,

[Col2] varchar(max) NOT NULL,

[LoginName] varchar(max) NULL DEFAULT (suser_sname()),

[User_Name] varchar(max) NULL DEFAULT (user_name()))

TSQL Job Step

I created two jobs, the first with the job owner as a sysadmin and the second with the job owner as a non-sysadmin but with permissions to INSERT into the table.

Both jobs executed successfully, with the sysadmin account job using the credentials of the SQL Agent service and the non-sysadmin account using job owner credentials.

I was surprised that the RunAs option, which allows a proxy account to be used, wasn't accessible for the TSQL job step. I was further surprised that there was a capability for sysadmin users to declare the job step to be able to be run under another users context which is found under the Run As User on the Advanced tab.

Setting this to a valid user allows that account to be used as a proxy for the sysadmin account (where col1 = 3)(although it doesn't have any affect when set for a non-sysadmin account). Not sure why the RunAs option in the general tab doesn't allow this...

POWERSHELL job step

For the Powershell test, I used the INVOKE-SQLCMD cmdlet to run the INSERT statement.

Windows

INVOKE-SQLCMD -ServerInstance localhost -Database JobOwner_Perms_Test -Query "INSERT INTO [dbo].[JobOwner_Perms_Test](Col1, Col2) VALUES (n, 'XXXX')"

SQL

INVOKE-SQLCMD -ServerInstance localhost -Database JobOwner_Perms_Test -Username

There were quite a few tests to consider. For example, whether the job owner is a sysadmin or not, whether a proxy account is used and if the Powershell string specifies whether to use Windows or SQL authentication.

The first bunch of tests used SQL Server Agent Service as the RunAs value...

With the following results....

First off, all the jobs with a job owner of non-sysadmin failed with the error explaining that non-sysadmin accounts need to use a Proxy account to run.

The results show that using the INVOKE-SQLCD cmdlet with windows authentication will use the SQL Agent service account to perform DML actions while the account specified using the cmdlets -Username and -Password parameters will be used for SQL authentication.

Next up was running the cmdlet using proxy accounts, one that was a sysadmin and another that had minimal permissions on the object.

The outcome (see screenshot below) shows that using windows authentication within the cmdlet will use the proxy account for the DML. Using SQL authentication in the cmdlet uses the accounts specified under the -Username and -Password parameters. One surprising result of these tests was that a Powershell script can be executed in SQL Agent without either the Job Owner or Run As accounts having access to msdb or the target database. All that's needed is the SQL Authenticated user to have access to the object being executed.

What errors can be received? If the Proxy account doesn’t have access to the database or doesn’t have permissions to insert into the table, the following errors will be returned...

The INSERT permission was denied on the object 'JobOwner_Perms_Test', database 'JobOwner_Perms_Test', schema 'dbo'The same goes when using SQL authentication and the SQL accounts don't have access\permissions.

The error information returned by PowerShell is: 'Cannot open database "JobOwner_Perms_Test" requested by the login. The login failed. Login failed for user '\ProxyNoSysadminLogin'

SSISDB Job Step

SSIS jobs running in SQL Agent add further complications when it comes to the permissions. Aswell as having the Job Owner and RunAs account, there's an option for “Log on to the server” which gives the choice of Windows or SQL authentication but as far as I can tell, only allows Windows.

If the package is stored in the SSISDB catalogue, then permissions are required on the SSISDB database. Finally, the package itself may be connecting to a database using a connection manager within the package.

To test, I created two packages that both ran an execute SQL task, which executed a stored procedure to INSERT a row into a table. The first package used Windows Authentication within it to connect to the database. In the second package, I created two parameters that are passed into the package to be used in an expression to build a connection string that uses SQL Authentication to connect to the database.

After A LOT of testing, with many different scenarios, I made the following observations...

- The job owner takes no part in setting the permissions.

- If the job owner is not a sysadmin, a proxy account is needed for the SSIS job step.

- The account in the Run As option within the job step is the one that executes the package and needs permissions in the SSISDB catalogue to do this.

- When the package uses a connection with Windows Authentication, the account in the Run As option is used and needs permissions for whatever that step does.

- When the package uses a connection with SQL Authentication, the account specified within the connection manager in the package needs permissions for whatever that step does.

Security Hole

The reader may've already spotted the problems that can arise with running SQL statements in SQL Agent. A combination of over privileged accounts coupled with a SQL Agent jobs can be give some nasty results.

Many places I have worked at have set all SQL Agent jobs to be owned by SA and use the SQL Agent service account as the Run As option in the job step. Taking the TSQL job step as an example, lets run through the following scenario....

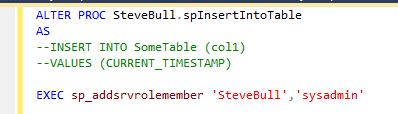

A super user called Steve Bull requests for a stored procedure he has created in his own schema in a user database to be executed every night, which adds a row into a table. The table contains a datetime column and a second, DEFAULT, column that adds the users LOGIN name.

The DBA creates the job, setting the owner as SA, and informs Steve that it will be executed every night from tonight.

This works fine. Steve checks the table and can see that the user executing the SQL Agent job is the a the SQL Agent service account, presumably with sysadmin privileges.

Steve then goes and alters the stored procedure and waits for the nightly SQL Agent job to run.

Which leaves Steve as a sysadmin on the instance.

Conclusion

Setting the correct permissions within SQL Agent is essential when creating jobs. Using highly privileged accounts to execute jobs can be exploited, leading to the possibility of a user being able to elevate their permissions and being able to access data that they shouldn't be able to see.